Support Vector Machine (SVM) is a powerful supervised machine learning algorithm used for classification and regression tasks. It’s particularly effective in high-dimensional spaces and for cases where the number of dimensions exceeds the number of samples. In this article, we’ll explore how SVM works, its key concepts, and its applications.

1.1. Hyperplane: SVM works by finding the optimal hyperplane that best separates different classes in the feature space. A hyperplane is a decision boundary that divides the feature space into two parts, one for each class.
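
As a minimal sketch of this idea, a hyperplane can be written as the set of points where `w . x + b = 0`, and the side a point falls on is given by the sign of that expression. The names `w`, `b`, `decision_value`, and `predict`, and the example line `x1 + x2 - 3 = 0`, are illustrative assumptions rather than anything fixed by the article.

```python
def decision_value(w, b, x):
    """Signed score w . x + b; it is zero exactly on the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if decision_value(w, b, x) >= 0 else -1


# Hypothetical 2-D hyperplane: x1 + x2 - 3 = 0
w, b = [1.0, 1.0], -3.0

print(predict(w, b, [3.0, 2.0]))  # 1  (score is 2.0)
print(predict(w, b, [0.5, 1.0]))  # -1 (score is -1.5)
```

In two dimensions the hyperplane is a line, in three a plane; the same function works unchanged for any number of features.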

1.2. Margin: The margin is the distance between the hyperplane and the nearest data points from either class; those nearest points are known as the support vectors. SVM aims to maximize this margin, as a wider margin tends to generalize better and reduces the risk of overfitting.

1.3. Support Vectors: Support vectors are the data points that are closest to the hyperplane. These points are crucial in defining the decision boundary and determining the margin.
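
The two definitions above can be illustrated with a short sketch: given a hyperplane, compute each point's distance to it, take the smallest distance as the margin, and report the points that attain it. The hyperplane, the toy points, and the tolerance `tol` are all assumptions chosen for illustration.

```python
import math


def distance_to_hyperplane(w, b, x):
    """Geometric distance |w . x + b| / ||w|| from point x to the hyperplane."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(score) / norm


def margin_and_support_vectors(w, b, points, tol=1e-9):
    """Return the smallest distance and the points that attain it."""
    distances = [distance_to_hyperplane(w, b, x) for x in points]
    margin = min(distances)
    support = [x for x, d in zip(points, distances) if d - margin <= tol]
    return margin, support


# Hypothetical data and the vertical line x1 = 2 as the hyperplane
points = [[0.0, 0.0], [1.0, 0.0], [3.0, 0.0], [5.0, 1.0]]
w, b = [1.0, 0.0], -2.0

margin, support = margin_and_support_vectors(w, b, points)
print(margin, support)  # 1.0 [[1.0, 0.0], [3.0, 0.0]]
```

Moving or deleting the points at distance 2.0 or 3.0 would not change the result, which is why only the support vectors matter for the boundary.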

1.4. Kernel Trick: SVM can efficiently perform nonlinear classification by using the kernel trick. This technique implicitly maps the input features into a higher-dimensional space where the classes are more easily separable by a hyperplane. Common kernels include linear, polynomial, and radial basis function (RBF).

2.1. Binary Classification: In binary classification, SVM aims to find the hyperplane that best separates the two classes while maximizing the margin. Mathematically, this can be represented as:

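$$\underset{\mathbf{w},\, b}{\arg\max}\ \left( \frac{1}{\lVert \mathbf{w} \rVert} \right) \quad \text{subject to } y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 \text{ for } i = 1, \dots, n$$

Where $\mathbf{w}$ is the weight vector, $b$ is the bias term, $\mathbf{x}_i$ are the feature vectors, and $y_i$ are the class labels (+1 or -1).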
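
To make the constraint concrete, the sketch below checks whether a candidate hyperplane satisfies `y_i (w . x_i + b) >= 1` for every training point and reports the margin `1 / ||w||` it would give. The toy data and the two candidates `wide` and `narrow` are assumptions for illustration; this only evaluates candidates, it does not solve the optimization.

```python
import math


def functional_margins(w, b, X, y):
    """y_i * (w . x_i + b) for every training point."""
    return [yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            for xi, yi in zip(X, y)]


def is_feasible(w, b, X, y):
    """True when every point satisfies the hard-margin constraint."""
    return all(m >= 1.0 for m in functional_margins(w, b, X, y))


def geometric_margin(w):
    """The quantity being maximized: 1 / ||w||."""
    return 1.0 / math.sqrt(sum(wj * wj for wj in w))


# Hypothetical linearly separable data
X = [[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0], [-2.0, -1.0]]
y = [1, 1, -1, -1]

wide = ([0.5, 0.5], 0.0)    # smaller ||w||, larger margin
narrow = ([1.0, 1.0], 0.0)  # also feasible, but a smaller margin

for w, b in (wide, narrow):
    print(is_feasible(w, b, X, y), geometric_margin(w))
```

Both candidates separate the data, but the one with the smaller weight norm has the larger margin, which is what the objective prefers.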

2.2. Soft Margin: In cases where the data is not linearly separable, SVM uses a soft margin approach. It allows for some misclassification (slack variables) to find a hyperplane with a larger margin. The objective function is modified to penalize misclassifications:

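$$\underset{\mathbf{w},\, b,\, \xi}{\arg\min}\ \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{n} \xi_i \quad \text{subject to } y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 - \xi_i,\ \xi_i \ge 0 \text{ for } i = 1, \dots, n$$

Where $C$ is the regularization parameter that controls the trade-off between maximizing the margin and minimizing the misclassification, and $\xi_i$ are the slack variables. The margin term is written here in its equivalent minimization form (maximizing $1/\lVert \mathbf{w} \rVert$ is the same as minimizing $\frac{1}{2}\lVert \mathbf{w} \rVert^2$) so that the slack penalty can be added to it.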
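
A small sketch may help show how the slack variables behave. For a fixed hyperplane, the smallest slack that satisfies the relaxed constraint is `max(0, 1 - y_i (w . x_i + b))`, so the soft-margin objective can be evaluated directly. The data, the hyperplane, and the function names are illustrative assumptions.

```python
def slack(w, b, x, y):
    """Smallest slack for one point: zero when the margin constraint holds."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return max(0.0, 1.0 - y * score)


def soft_margin_objective(w, b, X, y, C):
    """0.5 * ||w||^2 plus C times the total slack."""
    reg = 0.5 * sum(wj * wj for wj in w)
    total_slack = sum(slack(w, b, xi, yi) for xi, yi in zip(X, y))
    return reg + C * total_slack


# Hypothetical 1-D-like data; the last point lies on the wrong side
X = [[2.0, 0.0], [0.5, 0.0], [-2.0, 0.0], [1.0, 0.0]]
y = [1, 1, -1, -1]
w, b = [1.0, 0.0], 0.0

print([slack(w, b, xi, yi) for xi, yi in zip(X, y)])  # [0.0, 0.5, 0.0, 2.0]
print(soft_margin_objective(w, b, X, y, C=1.0))       # 3.0
print(soft_margin_objective(w, b, X, y, C=10.0))      # 25.5
```

The second point is classified correctly but sits inside the margin (slack 0.5), while the fourth is misclassified (slack 2.0). Raising `C` makes the same violations cost more, pushing the optimizer toward fewer violations at the price of a narrower margin.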

3.1. Text and Document Classification: SVM is widely used in text and document classification tasks, such as spam detection, sentiment analysis, and topic categorization.

3.2. Image Recognition: SVM is used for image classification and object detection tasks, where it can classify images into different categories or detect objects within an image.

3.3. Bioinformatics: SVM is used in bioinformatics for tasks such as protein classification, gene expression analysis, and disease diagnosis.

3.4. Financial Forecasting: SVM is used in financial forecasting for tasks such as stock price prediction, credit scoring, and risk management.

4.1. Advantages:

4.2. Disadvantages:

5. Working of SVM in Detail

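5.1. Optimization Objective: The optimization objective of SVM is to maximize the margin, which is defined as the distance between the hyperplane and the support vectors. Because maximizing $1/\lVert \mathbf{w} \rVert$ is equivalent to minimizing $\frac{1}{2}\lVert \mathbf{w} \rVert^2$, which is a convex quadratic function, the problem is usually written as a minimization: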

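$$\underset{\mathbf{w},\, b}{\arg\min}\ \left( \frac{1}{2}\lVert \mathbf{w} \rVert^2 \right) \quad \text{subject to } y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 \text{ for } i = 1, \dots, n$$

Here, $\mathbf{w}$ is the weight vector perpendicular to the hyperplane, $b$ is the bias term, $\mathbf{x}_i$ are the feature vectors, and $y_i$ are the class labels (+1 or -1).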
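
For the simplest possible case, one point per class, this problem has a closed-form solution: the optimal hyperplane is the perpendicular bisector of the segment joining the two points, and both constraints hold with equality. The sketch below works that case through; the function name `two_point_svm` and the example points are assumptions, and real datasets need a quadratic programming or similar solver instead.

```python
import math


def two_point_svm(x_pos, x_neg):
    """Hard-margin solution for one +1 point and one -1 point."""
    d = [p - n for p, n in zip(x_pos, x_neg)]        # direction between the points
    d_sq = sum(di * di for di in d)
    w = [2.0 * di / d_sq for di in d]                # scaled so the scores are +1 and -1
    midpoint = [(p + n) / 2.0 for p, n in zip(x_pos, x_neg)]
    b = -sum(wi * mi for wi, mi in zip(w, midpoint)) # hyperplane passes through the midpoint
    return w, b


def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


x_pos, x_neg = [2.0, 2.0], [0.0, 0.0]
w, b = two_point_svm(x_pos, x_neg)

print(w, b)                                    # [0.5, 0.5] -1.0
print(score(w, b, x_pos), score(w, b, x_neg))  # 1.0 -1.0
print(0.5 * sum(wi * wi for wi in w))          # objective value: 0.25
```

Both points are support vectors here, and the margin `1 / ||w||` equals half the distance between them.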

5.2. Kernel Trick: The kernel trick is a powerful concept in SVM that allows it to handle nonlinear classification tasks. Instead of explicitly mapping the input features into a higher-dimensional space, the kernel function computes the dot product of the mapped vectors efficiently. This avoids the computational cost of explicitly transforming the features.
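
The equivalence can be checked numerically for one small case. For 2-D inputs, the degree-2 polynomial kernel `(x . z)^2` equals the ordinary dot product after the explicit map `phi(x) = (x1^2, sqrt(2) * x1 * x2, x2^2)`. This particular kernel and feature map are chosen here for illustration only; the article does not single them out.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def phi(x):
    """Explicit degree-2 feature map for a 2-D input."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2]


def poly2_kernel(x, z):
    """Same inner product, computed without building phi(x) or phi(z)."""
    return dot(x, z) ** 2


x, z = [1.0, 2.0], [3.0, 4.0]

explicit = dot(phi(x), phi(z))  # works in the 3-D mapped space
implicit = poly2_kernel(x, z)   # works in the original 2-D space

print(implicit)                          # 121.0
print(abs(explicit - implicit) < 1e-9)   # True
```

For higher degrees or the RBF kernel the mapped space grows very large or becomes infinite-dimensional, which is where computing the kernel directly pays off.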

5.3. Types of Kernels:
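
The three kernels named earlier in the article (linear, polynomial, and RBF) can be sketched as plain functions. The parameter names `degree`, `coef0`, and `gamma` and their default values are assumptions that follow common convention, not values taken from the article.

```python
import math


def linear_kernel(x, z):
    """K(x, z) = x . z"""
    return sum(xi * zi for xi, zi in zip(x, z))


def polynomial_kernel(x, z, degree=3, coef0=1.0):
    """K(x, z) = (x . z + coef0) ** degree"""
    return (linear_kernel(x, z) + coef0) ** degree


def rbf_kernel(x, z, gamma=0.5):
    """K(x, z) = exp(-gamma * ||x - z||^2)"""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)


x, z = [1.0, 2.0], [3.0, 4.0]

print(linear_kernel(x, z))                 # 11.0
print(polynomial_kernel(x, z, degree=2))   # 144.0
print(rbf_kernel(x, x))                    # 1.0 (identical points)
print(rbf_kernel(x, z))                    # close to 0 for distant points
```

The RBF value depends only on the distance between the two points, so it behaves like a similarity score that decays from 1 toward 0 as the points move apart.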

6. SVM for Regression

While SVM is widely known for classification, it can also be used for regression tasks. In SVM regression, the goal is to fit as many instances as possible within a given margin while minimizing the margin violation. The optimization objective for SVM regression can be represented as:

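$$\underset{\mathbf{w},\, b,\, \xi}{\arg\min}\ \left( \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{n} (\xi_i + \xi_i^*) \right)$$

Subject to:

$$y_i - (\mathbf{w} \cdot \mathbf{x}_i + b) \le \varepsilon + \xi_i, \quad (\mathbf{w} \cdot \mathbf{x}_i + b) - y_i \le \varepsilon + \xi_i^*, \quad \xi_i,\ \xi_i^* \ge 0 \quad \text{for } i = 1, \dots, n$$

Here, $\xi_i$ and $\xi_i^*$ are slack variables for points above and below the margin, $C$ is the regularization parameter, $\varepsilon$ is the half-width of the margin around the fitted function, and $y_i$ are now real-valued targets rather than class labels.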
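
A short sketch of the regression objective, assuming the standard epsilon-insensitive formulation: a prediction within `epsilon` of the target costs nothing, and beyond that the cost grows linearly, which is the value the two slack variables take together for a fixed model. The data, the linear model, and the names `epsilon_insensitive_loss` and `svr_objective` are illustrative assumptions.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the margin of half-width epsilon, linear outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_objective(w, b, X, y, C, epsilon):
    """0.5 * ||w||^2 plus C times the total margin violation."""
    reg = 0.5 * sum(wj * wj for wj in w)
    violations = sum(
        epsilon_insensitive_loss(yi, sum(wj * xj for wj, xj in zip(w, xi)) + b, epsilon)
        for xi, yi in zip(X, y)
    )
    return reg + C * violations


# Hypothetical 1-D regression data and a candidate line f(x) = x
X = [[1.0], [2.0], [3.0]]
y = [1.0, 2.5, 2.0]
w, b = [1.0], 0.0

print(epsilon_insensitive_loss(2.5, 2.0, 0.5))             # 0.0 (on the margin edge)
print(epsilon_insensitive_loss(2.0, 3.0, 0.5))             # 0.5 (outside the margin)
print(svr_objective(w, b, X, y, C=1.0, epsilon=0.5))       # 1.0
```

Only the third point contributes to the penalty; the first two lie within the margin and are ignored, which is what makes the fitted function depend on a subset of the data.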

In conclusion, Support Vector Machine (SVM) is a powerful algorithm for classification and regression tasks, particularly effective in high-dimensional spaces. Its ability to find the optimal hyperplane while maximizing the margin makes it a popular choice for various machine learning applications.

You must be logged in to post a comment.

WELCOME TO SOFTDOZE.COM

Softdoze.com is a technology-focused website offering a wide range of content on software solutions, tech tutorials, and digital tools. It provides practical guides, reviews, and insights to help users optimize their use of software, improve productivity, and stay updated on the latest technological trends. The platform caters to both beginners and advanced users, delivering useful information across various tech domains.

Elon Musk’s X fails bid to escape Australian fine Elon Musk’s X on Friday lost a legal bid to avoid a $417,000 fine levelled by

Facebook, one of the original social media networks Facebook, one of the original social media networks, has become known as the platform of parents and

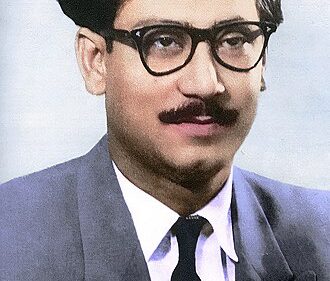

Bangabandhu Sheikh Mujibur Rahman Bangabandhu Sheikh Mujibur Rahman Born: March 17, 1920, Tungipara, Gopalganj, British India (now Bangladesh) Died: August 15, 1975, Dhaka, Bangladesh Role:

হারানো-নষ্ট হয়ে যাওয়া ড্রাইভিং লাইসেন্স উত্তোলন হারানো ড্রাইভিং লাইসেন্স উত্তোলন করার উপায়। আমাদের মাঝে অনেকেই আছেন যারা ড্রাইভিং লাইসেন্স হারিয়ে ফেলছেন অথবা নষ্ট করে ফেলছেন।

Submit a Comment