
The K-Nearest Neighbors (KNN) algorithm is a simple yet powerful supervised machine learning algorithm used for both classification and regression. It is non-parametric and instance-based: rather than assuming a particular form for the underlying data distribution or learning a fixed set of model parameters, it stores the training instances themselves and uses them directly to make predictions. In this article, we explore how KNN works, how to use it in Python, and its strengths and weaknesses.

How KNN Works

The KNN algorithm rests on the assumption that similar data points lie close to each other in feature space. To make a prediction for a new data point, the algorithm computes the distance (commonly Euclidean) between that point and every point in the training dataset. It then selects the K training points with the smallest distances, its nearest neighbors. Finally, it assigns the new point the majority class label among these K neighbors (for classification) or the average of their target values (for regression).
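To make these steps concrete, here is a minimal from-scratch sketch in plain Python. It assumes Euclidean distance and a brute-force search over all training points; the function names (`euclidean`, `k_nearest`, `knn_classify`, `knn_regress`) and the toy data are hypothetical and for illustration only, and this is not how scikit-learn implements KNN internally.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_nearest(train_X, query, k):
    """Indices of the k training points closest to `query` (brute-force search)."""
    # Compute the distance from the query to every training point, then sort.
    # Ties in distance are broken by the lower training index.
    ranked = sorted((euclidean(x, query), i) for i, x in enumerate(train_X))
    return [i for _, i in ranked[:k]]


def knn_classify(train_X, train_y, query, k=3):
    """Classification: majority vote among the k nearest neighbors' labels."""
    votes = Counter(train_y[i] for i in k_nearest(train_X, query, k))
    # On a tied vote, most_common returns the label encountered first,
    # i.e. the tied label whose nearest member is closest to the query.
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k=3):
    """Regression: mean target value of the k nearest neighbors."""
    neighbors = k_nearest(train_X, query, k)
    return sum(train_y[i] for i in neighbors) / len(neighbors)


# Toy data: two well-separated clusters (made-up values for illustration)
train_X = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
labels = ["a", "a", "a", "b", "b", "b"]
targets = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]

print(knn_classify(train_X, labels, [1.1, 1.0]))  # a
print(knn_regress(train_X, targets, [1.1, 1.0]))  # 2.0
```

Note that "training" here is just keeping `train_X` around: all the work happens at prediction time, which is what makes KNN instance-based.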

Implementation of KNN Algorithm in Python

Let’s apply KNN to a classification task using Python and the popular machine learning library scikit-learn. First, we import the necessary libraries and load the Iris dataset for demonstration.

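```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

# Load the Iris dataset: feature matrix X and species labels y
X, y = load_iris(return_X_y=True)

# Hold out 20% of the samples for testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Standardize features; fit the scaler on the training set only, then reuse it on the test set
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# Classify each test point by majority vote among its 3 nearest training points
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(X_train, y_train)
y_pred = knn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
print("Accuracy:", accuracy)
```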

In this example, we use the Iris dataset, which contains 150 samples of three species of iris flowers, and we aim to predict the species from each flower’s sepal and petal measurements. We split the dataset into training (80%) and testing (20%) sets and standardize the features. Scaling matters for KNN because a feature measured on a larger numeric range would otherwise dominate the distance calculation; the scaler is fitted on the training set only and then applied to the test set. We then initialize the KNN classifier with n_neighbors=3, train it on the training set, make predictions on the test set, and calculate the model’s accuracy.
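To see why standardization matters for a distance-based method, here is a small plain-Python sketch on made-up toy data (not the Iris dataset). Without scaling, the second feature’s much larger numeric range dominates the Euclidean distance and changes which training point counts as nearest. The helper names are hypothetical; the z-score uses the population standard deviation, which matches the biased (ddof=0) estimator that StandardScaler uses.

```python
import math
import statistics


def fit_standardizer(rows):
    """Per-feature mean and population standard deviation from the training rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]  # assumes no constant feature
    return means, stds


def transform(rows, means, stds):
    """Apply z = (x - mean) / std using statistics learned from the training set."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def nearest_index(train, query):
    """Index of the training point with the smallest Euclidean distance to query."""
    return min(range(len(train)), key=lambda i: math.dist(train[i], query))


# Made-up toy data: feature 0 spans about 2 units, feature 1 spans about 200 units
train = [[1.0, 100.0], [1.1, 300.0], [3.0, 110.0], [3.1, 290.0]]
query = [1.05, 120.0]

print(nearest_index(train, query))  # 2: the large-scale feature dominates

means, stds = fit_standardizer(train)
scaled_train = transform(train, means, stds)
scaled_query = transform([query], means, stds)[0]
print(nearest_index(scaled_train, scaled_query))  # 0: both features now contribute
```

As in the scikit-learn example, the statistics are computed from the training data only and then reused to transform the query.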

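Strengths of KNN Algorithm

KNN is easy to understand and implement, which makes it a good starting point for beginners. It has essentially no training phase, since fitting amounts to storing the training data, and because it is non-parametric it makes no assumptions about the form of the data distribution. The same neighbor-based idea handles both classification (majority vote) and regression (averaging).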

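Weaknesses of KNN Algorithm

Because every prediction requires computing the distance from the new point to all training points, prediction becomes computationally expensive on large datasets, and the entire training set must be kept in memory. KNN is also sensitive to noise and outliers, since a few mislabeled points close to a query can sway its vote (especially with a small K), and to feature scaling, which is why the example above standardizes the features.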
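One weakness of KNN is its sensitivity to noisy or mislabeled training points. The sketch below uses made-up toy data and a hypothetical `knn_classify` helper: a single mislabeled point sitting inside cluster "a" decides the prediction when k = 1, but is outvoted when k = 3.

```python
import math
from collections import Counter


def knn_classify(train_X, train_y, query, k):
    """Majority vote among the k training points nearest to query (Euclidean)."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]


# Made-up toy data: cluster "a" near the origin, cluster "b" near (5, 5).
# The point [0.5, 0.5] is deliberately mislabeled "b" to simulate noise.
train_X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5],
           [5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
train_y = ["a", "a", "a", "a", "b", "b", "b", "b"]

query = [0.6, 0.6]
print(knn_classify(train_X, train_y, query, k=1))  # b: the noisy point alone decides
print(knn_classify(train_X, train_y, query, k=3))  # a: two "a" neighbors outvote it
```

Generally, a larger K smooths over isolated noise at the cost of blurring genuine class boundaries, so K is usually tuned on held-out data.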

Conclusion

The K-Nearest Neighbors algorithm is a simple yet powerful machine learning algorithm for both classification and regression. It is easy to understand and implement, which makes it ideal for beginners. However, it has limitations: prediction is computationally expensive on large datasets, the entire training set must be kept in memory, and it is sensitive to noise and outliers. Despite these limitations, KNN remains a popular choice for many machine learning tasks thanks to its simplicity and effectiveness.


WELCOME TO SOFTDOZE.COM

Softdoze.com is a technology-focused website offering a wide range of content on software solutions, tech tutorials, and digital tools. It provides practical guides, reviews, and insights to help users optimize their use of software, improve productivity, and stay updated on the latest technological trends. The platform caters to both beginners and advanced users, delivering useful information across various tech domains.

Elon Musk’s X fails bid to escape Australian fine Elon Musk’s X on Friday lost a legal bid to avoid a $417,000 fine levelled by

Facebook, one of the original social media networks Facebook, one of the original social media networks, has become known as the platform of parents and

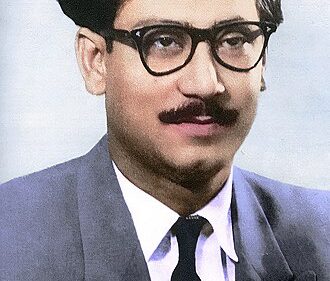

Bangabandhu Sheikh Mujibur Rahman Bangabandhu Sheikh Mujibur Rahman Born: March 17, 1920, Tungipara, Gopalganj, British India (now Bangladesh) Died: August 15, 1975, Dhaka, Bangladesh Role:

হারানো-নষ্ট হয়ে যাওয়া ড্রাইভিং লাইসেন্স উত্তোলন হারানো ড্রাইভিং লাইসেন্স উত্তোলন করার উপায়। আমাদের মাঝে অনেকেই আছেন যারা ড্রাইভিং লাইসেন্স হারিয়ে ফেলছেন অথবা নষ্ট করে ফেলছেন।

Submit a Comment